Efforts to systematically anticipate when and where crimes will occur are being tried out in several cities. The Chicago Police Department, for example, created a predictive analytics unit last year.

But Santa Cruz’s method is more sophisticated than most. Based on models for predicting aftershocks from earthquakes, it generates projections about which areas and windows of time are at highest risk for future crimes by analyzing and detecting patterns in years of past crime data. The projections are recalibrated daily, as new crimes occur and updated data is fed into the program.
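The article does not say which aftershock model the program uses. As a rough illustration only, the sketch below assumes a self-exciting (Hawkes-style) point process, one common family of aftershock models, in which each past event temporarily raises the expected rate of new events nearby. The function names, parameter values, and event data are all hypothetical.

```python
import math

def intensity(t, past_event_times, mu=0.5, alpha=0.8, beta=1.2):
    """Expected event rate at time t (in days) for one area.

    mu    -- assumed constant background rate
    alpha -- assumed jump in rate caused by each past event
    beta  -- assumed decay rate of that jump
    All three values are illustrative, not fitted to real data.
    """
    boost = sum(
        alpha * math.exp(-beta * (t - s))
        for s in past_event_times
        if s < t
    )
    return mu + boost

def rank_areas(events_by_area, t):
    """Return area names ordered from highest to lowest projected risk at time t."""
    scores = {area: intensity(t, times) for area, times in events_by_area.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical event times (days since the start of the record) per area.
events_by_area = {
    "A": [1.0, 9.0, 9.5],  # two very recent events
    "B": [2.0, 3.0],       # only old events
    "C": [],               # no recorded events
}

ranking = rank_areas(events_by_area, t=10.0)
print(ranking)
```

Re-running `rank_areas` each day with the newest events appended would play the role of the daily recalibration described above, since recent events weigh more heavily than old ones.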

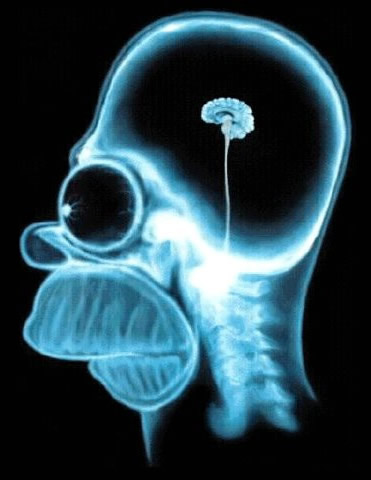

The bot, called LIDA for Learning Intelligent Distribution Agent, is based on "global workspace theory" (GWT). According to GWT, unconscious processing (the gathering and processing of sights and sounds, for example) is carried out by different, autonomous brain regions working in parallel. We only become conscious of information when it is deemed important enough to be "broadcast" to the global workspace, an assembly of connected neurons that spans the brain. We experience this broadcast as consciousness, and it allows information to be shared across different brain regions and acted upon.

Recently, several experiments using electrodes have pinpointed brain activity that might correspond to the conscious broadcast, although how exactly the theory translates into cognition and conscious experience still isn't clear.

...

To increase her chance of success, they grounded the timings of LIDA's underlying processes on known neurological data. For example, they set LIDA's feature detectors to check sensory memory every 30 milliseconds. According to previous studies, this is the time it takes for a volunteer to recognise which category an image belongs to when it is flashed in front of them.

Next the researchers set LIDA loose on two tasks. The first was a version of a reaction-time test in which you must press a button whenever a light turns green. The researchers planted such a light in LIDA's simulated environment, and provided her with a virtual button. It took her on average 280 milliseconds to "hit" the button after the light turned green. The average reaction time in people is 200 milliseconds, which the researchers say is "comparable".

A second task involved a flashing horizontal line that appears first at the bottom of a computer screen and then moves upwards through 12 different positions. When the rate at which it shifts up the screen is slow, people report the line as moving. But speed it up and people seem to see 12 flickering lines. When the researchers created a similar test for LIDA, they found that at higher speeds, she too failed to "perceive" that the line was moving. This occurred at about the same speed as in humans. Both results have been accepted for publication in PLoS One.

"You tune the parameters and lo and behold you get that data," says Franklin. "This lends support to our hypothesis that there is a single basic building block for all human cognition." Antonio Chella, a roboticist at the University of Palermo in Italy and editor of the International Journal of Machine Consciousness, agrees: "This may support LIDA, and GWT as a model that captures some aspects of consciousness."

After its Jeopardy! fame fades, Watson is going to get down to serious work. The IBM team led by computer scientist Dave Ferrucci is already deploying Watson in health care. The same way IBM fed Watson Wikipedia, the Bible, and a geospatial database (the equivalent of a million pages of documents), it has begun to feed Watson electronic medical records, doctors’ notes, patient histories, symptoms, and the USP Pharmacopeia. Here’s the amazing thing: The machine is getting faster at learning. Teaching it to play Jeopardy! at a championship level took four years. Teaching it to deliver reasonably accurate answers to diagnostic questions took only four months. I can see IBM selling Watson as a Web-delivered service to doctors and hospitals seeking answers for a patient presenting with problems. Watson considers everything and creates evidence profiles (the types of information it relies on, weighted based on their reliability and utility) that feed into diagnoses graded on varying levels of confidence. These can be offered up as charts on an iPad showing a doctor Watson’s thought process. It’s like peering into the mind of a House, M.D. The doctors make the final call, but they can assess possibilities they may not have seen and can click right to the source material used to compile Watson’s answers. This is powerful stuff.

The genome is not the program; it's the data. The program is the ontogeny of the organism, which is an emergent property of interactions between the regulatory components of the genome and the environment, which uses that data to build species-specific properties of the organism.

The average human’s resting heat dissipation is something like 2000 kilocalories per day. Making a rough approximation, assume the brain dissipates 1/10 of this: 200 kilocalories per day. That works out (you do the math) to about 9.7 joules per second. This puts an upper limit on how much the brain can calculate, assuming it is an irreversible computer: about 5 × 10^19 64-bit operations per second.

Considering all the noise various people make over theories of consciousness and artificial intelligence, this seems to me a pretty important number to keep track of. Assuming a 3 GHz Pentium is actually doing 3 billion calculations per second (it can’t), it is about 17 billion times less powerful than the upper bound on a human brain. Even if brains are computationally only 1/1000 of their theoretical efficiency, computers need to get 17 million times quicker to beat a brain.
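For anyone who would rather not do the math by hand, here is a small sketch of the arithmetic. It assumes the bound in question is Landauer's principle (erasing one bit costs at least kT ln 2 of energy), that the brain sits at roughly body temperature (310 K), and that one 64-bit operation erases 64 bits. Those three assumptions are mine, as are the function names; the text only says "irreversible computer".

```python
import math

K_BOLTZMANN = 1.380649e-23   # Boltzmann constant, J/K
JOULES_PER_KCAL = 4184.0     # one kilocalorie in joules
SECONDS_PER_DAY = 86_400

def brain_power_watts(kcal_per_day=200.0):
    """Convert a daily energy budget in kilocalories to joules per second."""
    return kcal_per_day * JOULES_PER_KCAL / SECONDS_PER_DAY

def landauer_ops_per_second(power_watts, temperature_k=310.0, bits_per_op=64):
    """Upper bound on irreversible operations per second at a given power.

    Assumes each operation erases bits_per_op bits and each erased bit
    costs at least k*T*ln(2) joules (Landauer's principle).
    """
    joules_per_bit = K_BOLTZMANN * temperature_k * math.log(2)
    return power_watts / (joules_per_bit * bits_per_op)

power = brain_power_watts()                 # brain's share: 200 kcal/day
brain_bound = landauer_ops_per_second(power)
cpu_ops = 3e9                               # idealized 3 GHz chip, one op per cycle
ratio = brain_bound / cpu_ops

print(f"power: {power:.2f} W")
print(f"brain upper bound: {brain_bound:.2e} 64-bit ops/s")
print(f"ratio to CPU: {ratio:.2e}")
print(f"ratio at 1/1000 efficiency: {ratio / 1000:.2e}")
```

Under these assumptions the bound comes out near 5 × 10^19 operations per second and the ratio near 17 billion, in line with the figures quoted above. Changing `temperature_k` or `bits_per_op` shifts the result proportionally, so the number is only as good as those inputs.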

The model of the Antikythera mechanism shown here is one of the clearest I've seen. I'm fascinated by analog computers, but I figure we need an entirely new category for "organic" models.

Ray Kurzweil is working on another book, this one to explore the principles of human-level intelligence in machines. Titled How the Mind Works and How to Build One, the new book will explore all the amazing developments in reverse engineering the brain that have come along since his last book, The Singularity Is Near, was released in 2005. Whether or not you agree with Ray Kurzweil’s predictions, the inventor and author stands out as one of the foremost futurists of our time.

Just a note to remind myself...

Source: National Institute of Standards and Technology Benchmark Test History

“I’m sorry, Dave. I can’t do that.”

In 2001 recognition accuracy topped out at 80%, far short of HAL-like levels of comprehension. Adding data or computing power made no difference. Researchers at Carnegie Mellon University checked again in 2006 and found the situation unchanged. With human discrimination as high as 98%, the unclosed gap left little basis for conversation.

By combining the old rule-based systems with insights from the new probabilistic systems, Goodman has found a way to model thought that could have broad implications for both AI and cognitive science.